Moshi

Moshi is a speech-text foundation model and full-duplex spoken dialogue framework.

At the core of Moshi is Mimi, a streaming neural audio codec. Mimi compresses 24 kHz audio down to a 12.5 Hz representation at 1.1 kbps. Its latency is 80 ms, which is the duration of one frame, and it still outperforms existing non-streaming codecs. Moshi models two audio streams, the user's and its own, along with an internal "monologue": it predicts text tokens for its own speech, which improves the quality of what it generates. Its theoretical latency is 160 ms (80 ms for a Mimi frame plus 80 ms of acoustic delay), and its practical latency is around 200 ms on an L4 GPU. That is fast enough for real-time interaction, so Moshi is well suited to building responsive conversational AI applications.
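The codec and latency figures above follow from simple arithmetic. The sketch below makes several assumptions. Moshi uses 8 Mimi codebooks of 2048 entries each, so each token carries 11 bits. The 160 ms theoretical latency is one 80 ms frame plus one 80 ms frame of acoustic delay. The constant names and the `codec_figures` and `iter_frames` helpers are hypothetical, for illustration only, and are not part of Moshi's API.

```python
import math

SAMPLE_RATE_HZ = 24_000     # input audio sample rate (from the text)
FRAME_RATE_HZ = 12.5        # Mimi latent frame rate (from the text)
NUM_CODEBOOKS = 8           # assumption: codebooks used per frame
CODEBOOK_SIZE = 2048        # assumption: entries per codebook (11 bits/token)
ACOUSTIC_DELAY_FRAMES = 1   # assumption: 80 ms acoustic delay = one frame


def codec_figures() -> dict:
    """Derive frame size, bitrate, and theoretical latency from the constants."""
    samples_per_frame = SAMPLE_RATE_HZ / FRAME_RATE_HZ         # 1920 samples
    frame_ms = 1000 / FRAME_RATE_HZ                            # 80 ms per frame
    bits_per_frame = NUM_CODEBOOKS * math.log2(CODEBOOK_SIZE)  # 8 * 11 = 88 bits
    bitrate_kbps = bits_per_frame * FRAME_RATE_HZ / 1000       # 1.1 kbps
    # Hypothetical breakdown: one codec frame plus the acoustic delay.
    theoretical_latency_ms = frame_ms * (1 + ACOUSTIC_DELAY_FRAMES)
    return {
        "samples_per_frame": samples_per_frame,
        "frame_ms": frame_ms,
        "bits_per_frame": bits_per_frame,
        "bitrate_kbps": bitrate_kbps,
        "theoretical_latency_ms": theoretical_latency_ms,
    }


def iter_frames(samples, samples_per_frame=1920):
    """Yield consecutive fixed-size frames, as a streaming codec consumes audio.

    A trailing partial frame is not yielded; a real streaming system would
    buffer it until enough new samples arrive.
    """
    for start in range(0, len(samples) - samples_per_frame + 1, samples_per_frame):
        yield samples[start:start + samples_per_frame]


if __name__ == "__main__":
    print(codec_figures())
    one_second = [0.0] * SAMPLE_RATE_HZ
    print(sum(1 for _ in iter_frames(one_second)), "full frames in 1 s of audio")
```

One second of 24 kHz audio fills 12 full 80 ms frames. The leftover 40 ms waits for more input, which is why a streaming codec's latency is bounded below by its frame duration.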

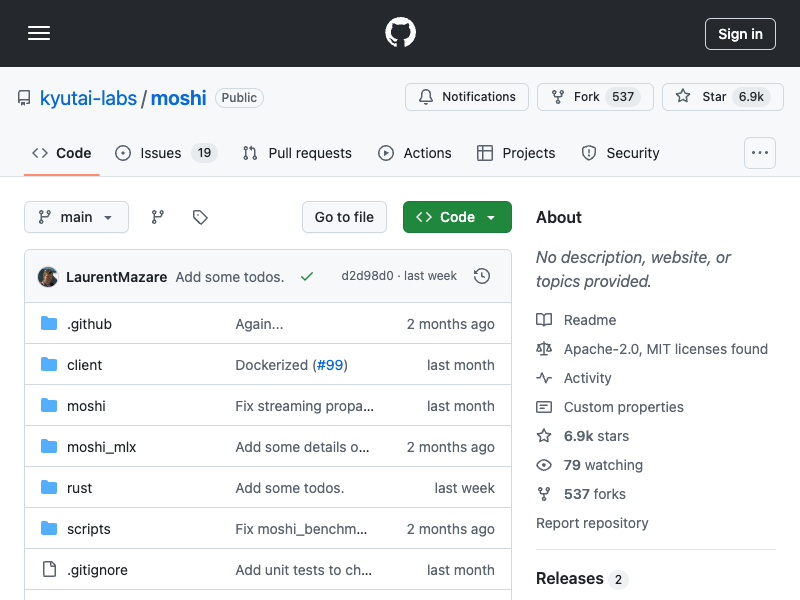

This framework is aimed at developers and researchers who need high-performance, low-latency speech processing. Moshi has PyTorch, MLX, and Rust backends, so users can deploy and experiment with it on a range of hardware configurations. Its open-source code and bundled web UI facilitate rapid prototyping and experimentation. A command-line client and detailed API documentation make it straightforward to integrate into custom workflows. The repository also includes a Gradio demo and instructions for local deployment, including echo cancellation. Moshi's modular design allows further customization, making it a foundation for advanced conversational AI development.